MCP: the Standard that Lets Your AI Plug Into Everything

When Wi-Fi and Bluetooth first appeared, they solved one brutal problem: every gadget spoke a different language. Suddenly there was a single standard and innovation took off. Artificial-intelligence development is now at a similar crossroads. Modern AI agents need live market feeds, GitHub repos, private databases, smart-contract calls, even your local file system—yet every integration has required custom glue code.

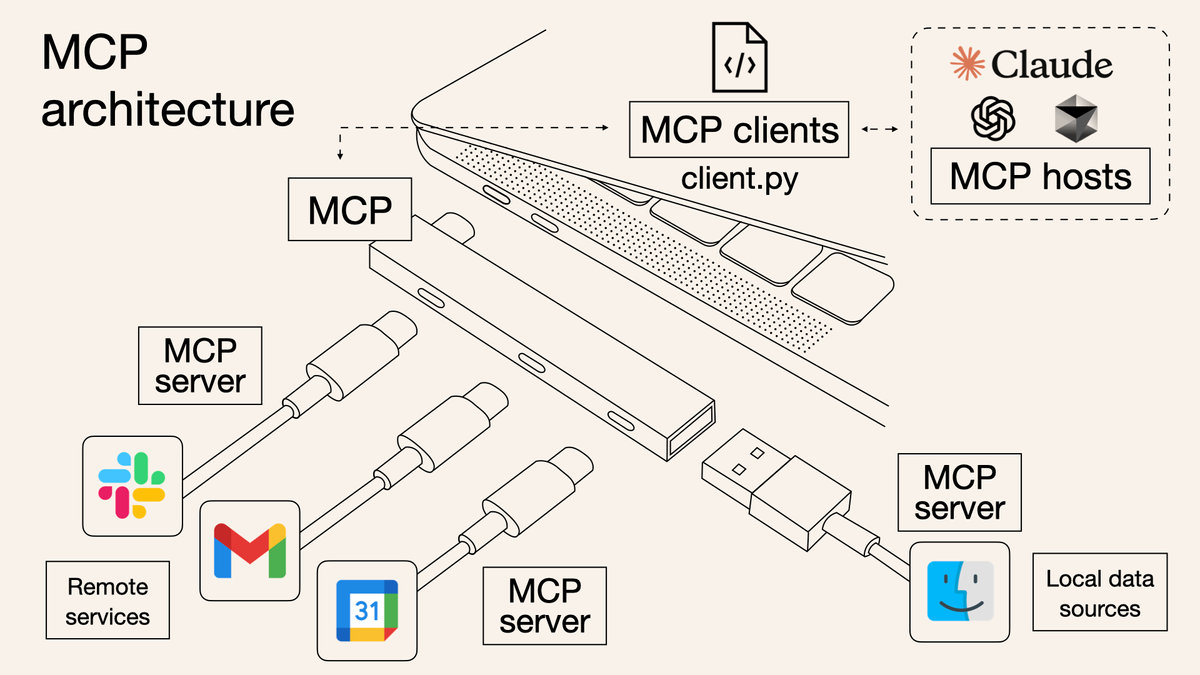

In November 2024, Anthropic introduced the Model Context Protocol (MCP) as that missing universal adapter. Think of MCP as USB-C for AI: one cable, many peripherals. Below we unpack how MCP works and the problems it solves for decentralized and open-source AI projects.

Understanding MCP

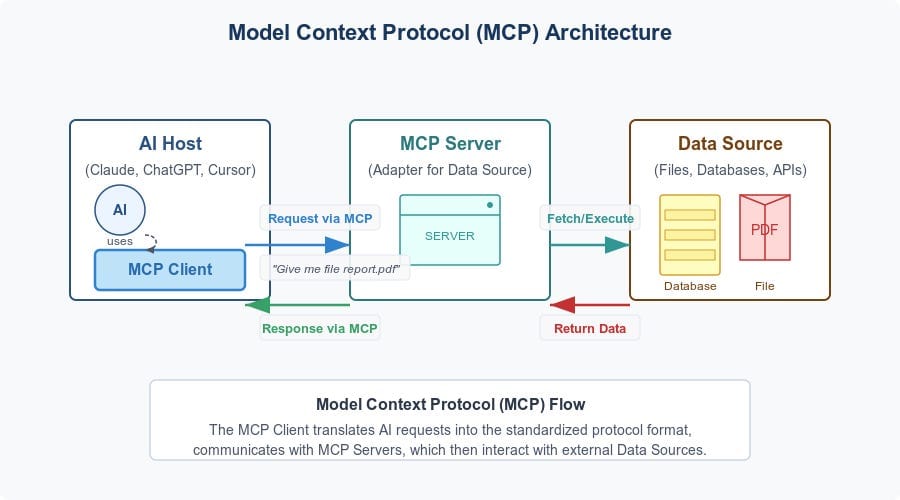

MCP is a stateful, bidirectional protocol that lets an AI model exchange context with any external system through a set of small “servers.” Each server advertises the data or tools it can expose (SQL queries, Jira tickets, Solidity contracts, you name it) using a common schema. The model, or the user application that hosts the model, can then call those servers much as a browser calls an HTTPS endpoint. MCP follows the traditional client-server model: a client and a server communicate over the protocol.

In other words, rather than requiring custom integration code for every new data source or tool, MCP gives AI models and the outside world one shared language for interaction.

The Architecture Explained

- MCP Host – The place where a user chats with the model (Claude Desktop, a VS Code plug-in, a Gaia node, etc.).

- MCP Client – A small shim inside the host that translates the model’s requests into the JSON-RPC messages MCP servers expect.

- MCP Server – A wrapper that sits in front of a tool or dataset, enforces auth and rate limits, and returns responses in the JSON format the protocol specifies.

How MCP Actually Works

When an AI model needs information or wants to perform an action, the workflow generally follows these steps:

- Request Initiation: The AI model, operating within the MCP host, generates a request for information or action.

- Client Processing: The MCP client receives this request and determines which MCP server can fulfill it.

- Server Communication: The client forwards the request to the appropriate MCP server, which then interfaces with the actual data source or service.

- Response Flow: The server returns the requested information or confirmation of action back through the client to the AI model.

- Context Integration: The AI model incorporates this new information into its reasoning process and continues the interaction.
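The five steps above can be sketched in a few lines of plain Python. This is only an illustration of the control flow, not the MCP SDK: `ToyServer`, `ToyClient`, `ToyHost`, and the tool names `fetch_pr` and `fetch_unit_tests` are all hypothetical, and a real client talks to servers over a transport rather than through direct function calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ToyServer:
    """Stand-in for an MCP server: a name plus the tools it exposes."""
    name: str
    tools: Dict[str, Callable[..., str]]

    def call(self, tool: str, **arguments) -> str:
        return self.tools[tool](**arguments)


@dataclass
class ToyClient:
    """Stand-in for the MCP client: picks the server that can fulfill a request."""
    servers: List[ToyServer]

    def route(self, tool: str) -> ToyServer:
        for server in self.servers:
            if tool in server.tools:
                return server
        raise LookupError(f"no server exposes tool {tool!r}")

    def request(self, tool: str, **arguments) -> str:
        server = self.route(tool)              # step 2: client processing
        return server.call(tool, **arguments)  # steps 3-4: server call and response


@dataclass
class ToyHost:
    """Stand-in for the host: keeps the model's running context."""
    client: ToyClient
    context: List[str] = field(default_factory=list)

    def model_wants(self, tool: str, **arguments) -> None:
        result = self.client.request(tool, **arguments)  # step 1: request initiation
        self.context.append(f"{tool} -> {result}")       # step 5: context integration


# Two hypothetical servers, each wrapping a different (fake) backend.
github = ToyServer("github", {"fetch_pr": lambda number: f"diff of PR #{number}"})
ci = ToyServer("ci", {"fetch_unit_tests": lambda number: f"test results for PR #{number}"})

host = ToyHost(ToyClient([github, ci]))
host.model_wants("fetch_pr", number=42)
host.model_wants("fetch_unit_tests", number=42)
print(host.context)
```

The host never needs to know which server answered; it only sees results accumulate in `context`, which is the property the workflow relies on.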

Because the connection is stateful, an AI agent can build a running memory (“I’ve already fetched PR #42, next fetch unit-tests”) instead of re-sending everything each turn. Because servers live behind their own trust boundary, we get granular permissioning by API key or wallet address, which is vital for Web3.

The team behind MCP provides a Software Development Kit (SDK) for building your own MCP servers and clients, with support for TypeScript, Python, Java, Kotlin, and other languages. A simple first server might expose nothing more than a calculator tool.
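To make the server side concrete, here is a minimal calculator-style sketch. It deliberately does not use the official SDK, so it runs with the standard library alone. It assumes the JSON-RPC 2.0 message shape and the `tools/list` and `tools/call` method names from the MCP specification, and it omits the initialization handshake and the transport layer that a real server needs. The `TOOLS` registry and the `handle` function are hypothetical names chosen for this example.

```python
import json
from typing import Any, Dict

# JSON Schema shared by both tools: two required numeric inputs.
_NUMBER_PAIR_SCHEMA = {
    "type": "object",
    "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
    "required": ["a", "b"],
}

# Hypothetical tool registry: description, input schema, and a plain handler.
TOOLS: Dict[str, Dict[str, Any]] = {
    "add": {
        "description": "Add two numbers.",
        "inputSchema": _NUMBER_PAIR_SCHEMA,
        "handler": lambda a, b: a + b,
    },
    "multiply": {
        "description": "Multiply two numbers.",
        "inputSchema": _NUMBER_PAIR_SCHEMA,
        "handler": lambda a, b: a * b,
    },
}


def _reply(request_id, *, result=None, error=None) -> str:
    """Build a JSON-RPC 2.0 response carrying either a result or an error."""
    body: Dict[str, Any] = {"jsonrpc": "2.0", "id": request_id}
    if error is not None:
        body["error"] = error
    else:
        body["result"] = result
    return json.dumps(body)


def handle(raw: str) -> str:
    """Handle one JSON-RPC request string and return the response string."""
    request = json.loads(raw)
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params") or {}

    if method == "tools/list":
        # Advertise each tool without leaking the handler itself.
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return _reply(request_id, result={"tools": tools})

    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _reply(request_id, error={"code": -32602, "message": "unknown tool"})
        value = tool["handler"](**params.get("arguments", {}))
        return _reply(request_id, result={"content": [{"type": "text", "text": str(value)}]})

    return _reply(request_id, error={"code": -32601, "message": "method not found"})


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}
print(handle(json.dumps(call)))
```

With the SDK, the registry and dispatch logic are handled for you; the sketch only shows what travels over the wire when a client lists and calls tools.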

N.B.: MCP is stateful, and a server can be created with either stdio or Server-Sent Events (SSE) transport. Learn more in the official documentation at modelcontextprotocol.io.

Why It Matters for Decentralized AI

One Protocol, Many Chains

A typical Web3 dev juggles Ethereum RPC, an Arweave gateway, IPFS pinning, and some SQL analytics service. With MCP you ship one client library; every chain-specific wrapper lives behind its own server. Your agent can ask for “tokenTransferHistory(wallet)” without knowing whether the server pulled from Etherscan or a Starknet archive node.

Gaia fits in here perfectly. Gaia nodes use their own compute to run models and expose configuration that node users can customize as much as they want. An MCP integration with Gaia would let builders run every Gaia node as both an MCP client and an MCP server, using any of the open-source AI models in Gaia’s suite and whatever configuration suits their needs.
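The “one client, many chain-specific servers” idea can be sketched as a shared interface with interchangeable backends. Everything here is hypothetical: the class names, the `token_transfer_history` method (a Python spelling of the tokenTransferHistory call mentioned above), and the canned return values, which stand in for real Etherscan or Starknet lookups.

```python
from typing import Dict, List, Protocol


class TransferHistoryServer(Protocol):
    """The single interface the agent codes against."""

    def token_transfer_history(self, wallet: str) -> List[Dict[str, str]]: ...


class EtherscanBackedServer:
    """Hypothetical server; a real one would query an Etherscan-style API."""

    def token_transfer_history(self, wallet: str) -> List[Dict[str, str]]:
        return [{"chain": "ethereum", "wallet": wallet, "source": "etherscan"}]


class StarknetArchiveServer:
    """Hypothetical server; a real one would query a Starknet archive node."""

    def token_transfer_history(self, wallet: str) -> List[Dict[str, str]]:
        return [{"chain": "starknet", "wallet": wallet, "source": "archive-node"}]


def agent_lookup(server: TransferHistoryServer, wallet: str) -> List[Dict[str, str]]:
    """The agent's code path is identical no matter which server sits behind it."""
    return server.token_transfer_history(wallet)


for backend in (EtherscanBackedServer(), StarknetArchiveServer()):
    print(agent_lookup(backend, "0xabc"))
```

Swapping chains then means registering a different server, not rewriting the agent.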

Real Autonomy

Autonomous agents are more than clever chatbots; they need to act. MCP servers can expose actions (e.g., submitPullRequest, sendUSDC). That means an MPC-secured multisig or a Gaia tool-calling LLM can actually push code, trade, or launch governance proposals in a single loop.

Security by Boundary

Because each server is its own microservice, secrets can stay on the server side rather than bleeding into the model prompt. A “DeFi-prices” server might offer read-only endpoints, while a “Treasury-tx” server requires wallet-signature headers. This compartmentalization is far easier to audit than giant monolithic plug-ins.
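A rough sketch of that boundary, under loud assumptions: an HMAC over a shared secret stands in for a real wallet signature (which would normally be an asymmetric signature checked against an address), and the class names, prices, and payload format are invented for illustration.

```python
import hashlib
import hmac


def sign(secret: bytes, payload: str) -> str:
    """Stand-in for wallet signing: HMAC-SHA256 over the payload."""
    return hmac.new(secret, payload.encode(), hashlib.sha256).hexdigest()


class DefiPricesServer:
    """Read-only server: no credentials needed, nothing to mutate."""

    _PRICES = {"ETH": "hypothetical-price-1", "USDC": "hypothetical-price-2"}

    def get_price(self, symbol: str) -> str:
        return self._PRICES[symbol]


class TreasuryTxServer:
    """Write server: every request must carry a valid signature."""

    def __init__(self, secret: bytes) -> None:
        self._secret = secret  # lives only on the server side, never in a prompt

    def send(self, payload: str, signature: str) -> str:
        expected = sign(self._secret, payload)
        # compare_digest avoids leaking information through comparison timing.
        if not hmac.compare_digest(expected, signature):
            raise PermissionError("invalid signature")
        return f"accepted: {payload}"


secret = b"demo-only-secret"
treasury = TreasuryTxServer(secret)
payload = "sendUSDC amount=10 to=0xabc"

print(DefiPricesServer().get_price("ETH"))
print(treasury.send(payload, sign(secret, payload)))
```

The model only ever sees the returned strings; the secret is held by the signer and the server, which is what makes each server auditable on its own.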

Composability & Community

Web3 thrives on composability: DeFi legos, NFT composables, roll-ups as a service. MCP brings the same mindset to AI: anyone can publish a server on GitHub and register it in a directory, and other builders can snap it into their workflows.

Spotlight on OpenMCP.app

Michael Yuan, a member of Gaia’s leadership team and an open-source pioneer (Second State, WebAssembly, DeAI research), built OpenMCP.app to make server creation almost copy-paste. Point it at any REST or GraphQL API, add a small YAML manifest, and OpenMCP generates:

- The MCP server wrapper

- JSON schema for all available methods

- A local playground to test calls and estimate token cost (critical for LLM budgets)

Example project: PR-Reviewer (GitHub). Feed it a repo path and an LLM; the server exposes listOpenPRs, summarizeDiff, and suggestReview. Any MCP-ready agent can then do code reviews across thousands of projects, a perfect fit for open-source maintenance or hackathon judging.

This MCP service has two tools for GitHub pull request analysis: review generates a Markdown review, and content retrieves the file and patch content. The server can be integrated with any MCP client (for example, Claude Desktop) by configuring the claude_desktop_config.json file to access the server via SSE.

Conclusion

Major companies are rapidly adopting the MCP standard, suggesting it may become as fundamental to AI as HTTP is to the web. As with any new standard, MCP faces challenges, such as ensuring widespread adoption across different AI systems while managing security and performance at scale. There are also plenty of opportunities:

- Creating new markets for specialized MCP servers

- Democratizing access to powerful AI capabilities

- Enabling more complex and capable AI agents

- Bridging traditional and decentralized systems

Sources

Official MCP documentation:

- modelcontextprotocol.io/docs/concepts/architecture

- modelcontextprotocol.io/introduction

Technical explanations:

- "What is Model Context Protocol (MCP): Explained" - Composio (composio.dev)

- "Model Context Protocol (MCP) an overview" by Philipp Schmid (philschmid.de)

- "MCP 101: An Introduction to Model Context Protocol" - DigitalOcean

- "The Model Context Protocol (MCP): A Complete Tutorial" - Medium

Industry analysis:

- "MCP: The new 'USB-C for AI' that's bringing fierce rivals together" - Ars Technica

- "A Deep Dive Into MCP and the Future of AI Tooling" - Andreessen Horowitz (a16z.com)

- "What is MCP and Why It's Critical for the Future of Multimodal AI" - Yodaplus

Implementation insights:

- "Model Context Protocol (MCP): A Guide With Demo Project" - DataCamp

- "The Model Context Protocol (MCP): The Ultimate Guide" - Medium

- "What is Model Context Protocol (MCP)? How it simplifies AI integrations" - norahsakal.com